Preface

Paper: https://arxiv.org/pdf/1601.02376.pdf

Official open-source code (Theano implementation): https://github.com/wnzhang/deep-ctr

Reference code (without FM initialization): https://github.com/Sherryuu/CTR-of-deep-learning

Refactored code: https://github.com/wyl6/Recommender-Systems-Samples/tree/master/RecSys%20And%20Deep%20Learning/DNN/fnn

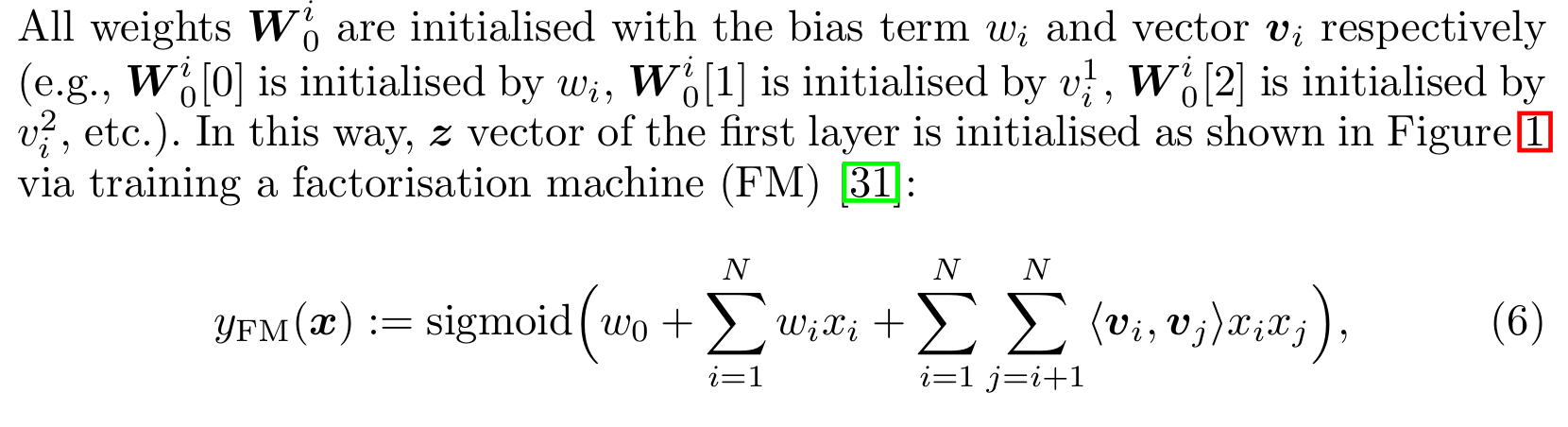

FNN = FM+MLP

What exactly does FM initialize?

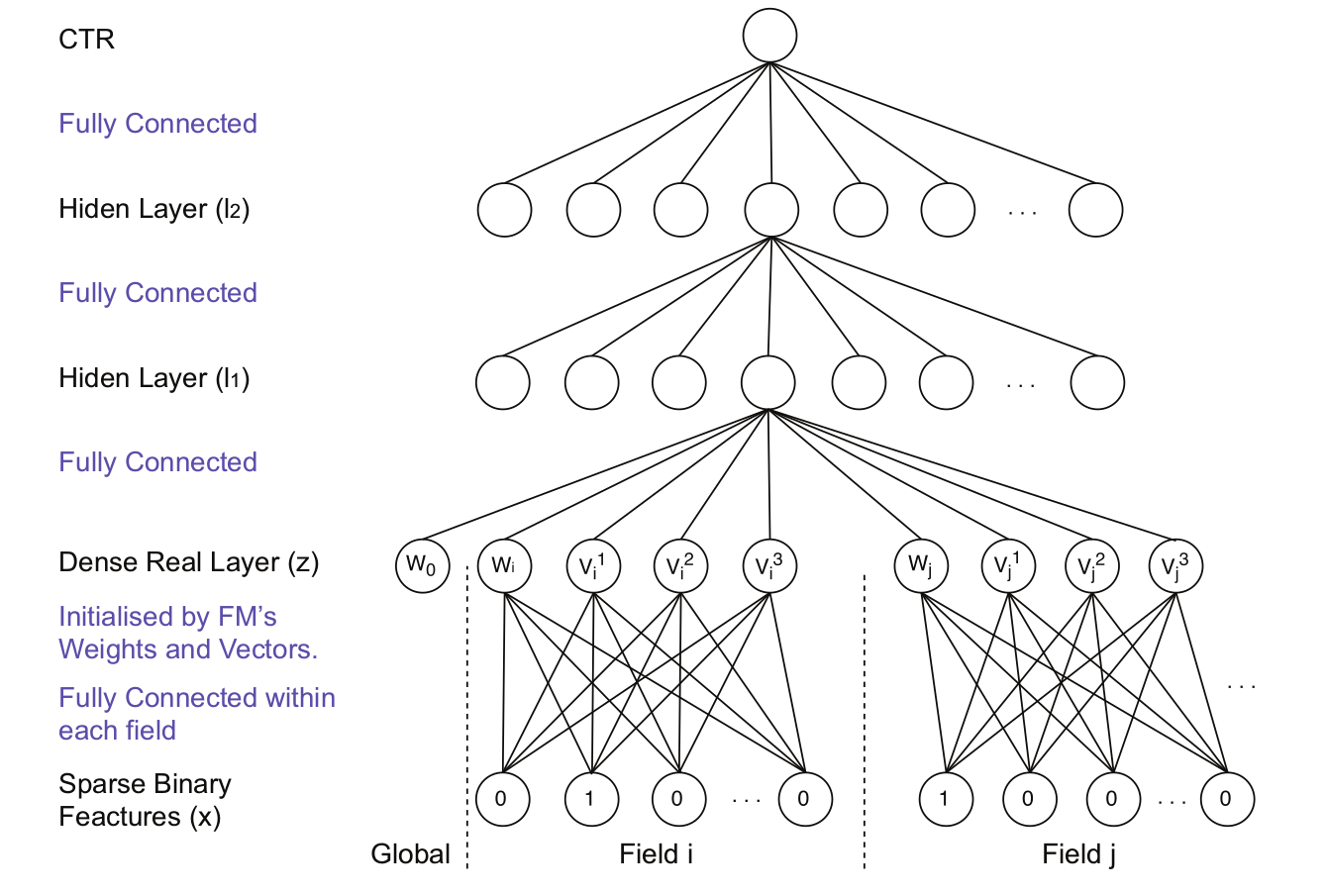

FNN first uses FM to initialize the input embedding layer and then uses an MLP for CTR prediction. How exactly is this done? Here is a figure from the paper:

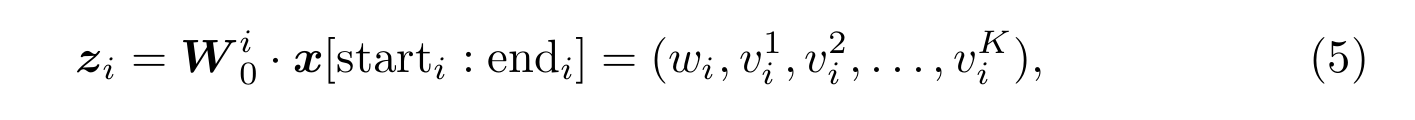

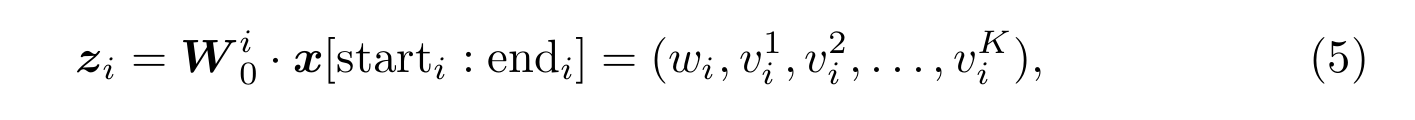

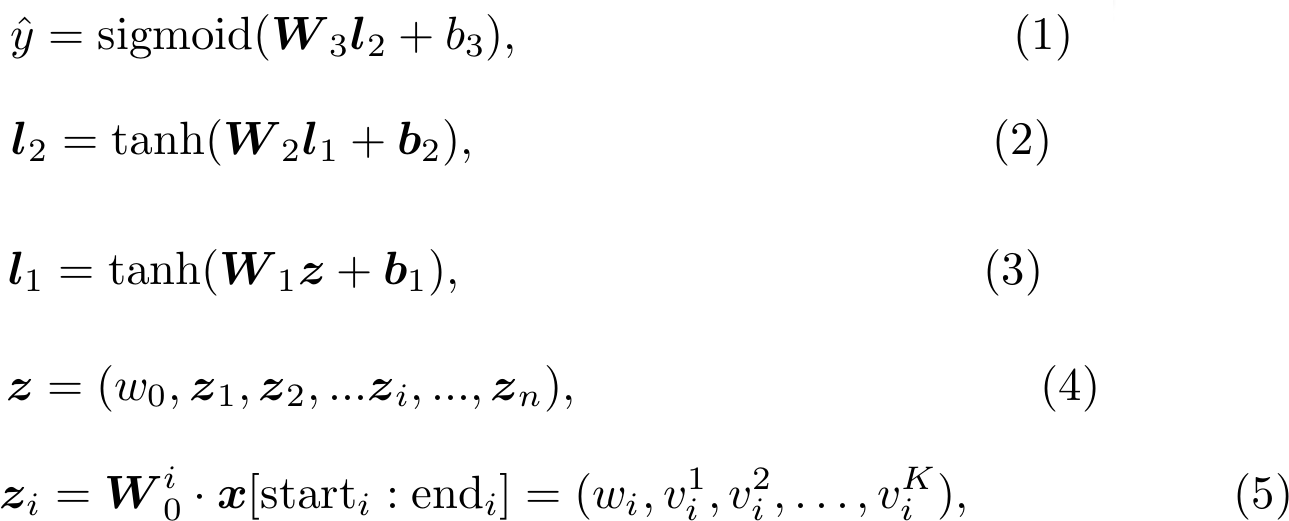

Read on its own, the figure is somewhat misleading, and the formula for the output z makes it even more so:
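In the paper's notation, the formula for z_i is, as far as I can reconstruct it (the exact symbols are transcribed from memory and should be checked against the paper):

$$
z_i = W_0^i \cdot x[\mathrm{start}_i : \mathrm{end}_i] = (w_i, v_i^1, v_i^2, \dots, v_i^K)
$$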

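Here w_i is the first-order coefficient of the i-th field obtained from FM initialization, v_i is the latent vector, and K is the dimension of v_i. If z itself were what gets initialized, the Dense Real Layer would show only one w_i and one v_i per field. That looks wrong: in FM, every feature of every field has its own w_i and v_i. So what is going on? In fact, what FM initializes is the weight matrix W between the sparse input vector x and the dense layer: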

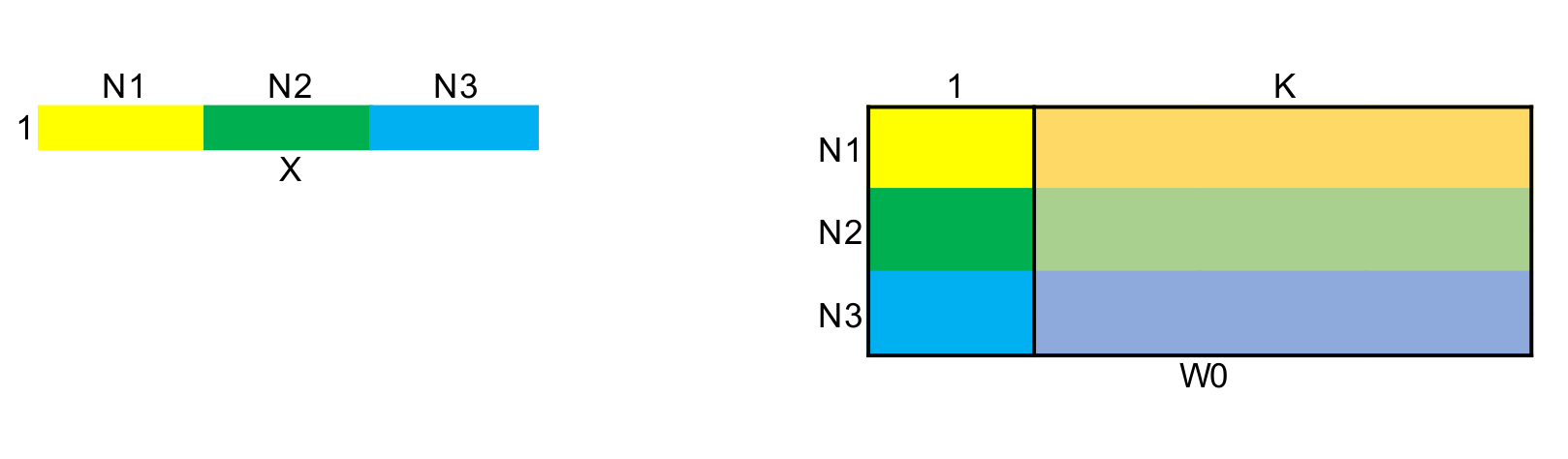

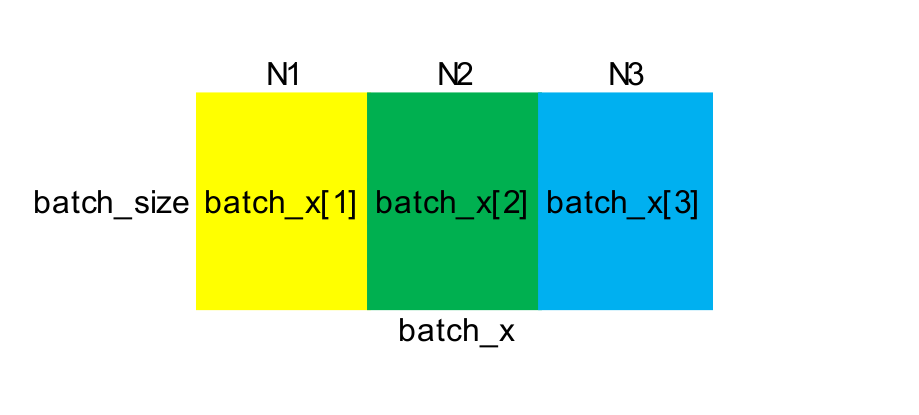

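Let me draw a picture. Suppose a sample has 3 fields with dimensions N1, N2 and N3. After FM training we obtain N=N1+N2+N3 latent vectors and first-order coefficients w, and we assemble them into the weight matrix W0:

However, the author does not multiply x by the whole weight matrix to compute z. That would give a (K+1)-dimensional result, equivalent to summing the (K+1)-dimensional vectors of all nonzero features of the sample. This reduces the dimension too aggressively, and compressing the data that hard always loses some information. Instead, the author multiplies field by field to get one (K+1)-dimensional result per field, and then concatenates the results of all fields:

With this initialization, since each field of a sample has exactly one nonzero value, the z value of the i-th field is simply the w and v of that nonzero feature: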
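The difference between the two ways of computing z can be checked with a small NumPy sketch. The field sizes, K, and the random w and v below are made-up stand-ins for trained FM parameters, not values from the post:

```python
import numpy as np

# Hypothetical toy setup: 3 fields with N1=2, N2=3, N3=2 features, and K=4.
field_sizes = [2, 3, 2]
K = 4
N = sum(field_sizes)

rng = np.random.default_rng(0)
w = rng.normal(size=(N, 1))          # stand-in for FM first-order coefficients
v = rng.normal(size=(N, K))          # stand-in for FM latent vectors
W0 = np.concatenate([w, v], axis=1)  # shape (N, K+1)

# One sample with exactly one active feature per field (index within the field).
active = [1, 0, 1]
offsets = np.cumsum([0] + field_sizes[:-1])  # start row of each field in W0
x = np.zeros(N)
for off, idx in zip(offsets, active):
    x[off + idx] = 1.0

# Multiplying the whole x by W0 sums the rows of all active features: K+1 dims.
z_sum = x @ W0

# FNN multiplies field by field and concatenates: 3 * (K+1) dims.
z_parts = [x[off:off + size] @ W0[off:off + size]
           for off, size in zip(offsets, field_sizes)]
z = np.concatenate(z_parts)

print(z_sum.shape, z.shape)
```

Each (K+1)-sized slice of z is exactly the W0 row of that field's active feature, and adding the slices together gives back z_sum.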

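The FNN pipeline

Once it is clear that FM initializes the weight matrix W0, the FNN pipeline is easy to follow. Reading from the output back to the input, all in one go: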
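That flow can be sketched as a plain NumPy forward pass. This assumes two tanh hidden layers and a sigmoid output, which is how I recall the paper describing it; the refactored code in this post uses relu instead. The layer sizes and random weights are placeholders only:

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def fnn_forward(z, params):
    """z: concatenated per-field embeddings; params: dict of placeholder weights."""
    l1 = np.tanh(params["W1"] @ z + params["b1"])      # first hidden layer
    l2 = np.tanh(params["W2"] @ l1 + params["b2"])     # second hidden layer
    return sigmoid(params["W3"] @ l2 + params["b3"])   # predicted click probability

rng = np.random.default_rng(0)
z_dim, h1, h2 = 15, 8, 4  # hypothetical sizes: 3 fields * (K+1) with K=4
params = {
    "W1": rng.normal(scale=0.1, size=(h1, z_dim)), "b1": np.zeros(h1),
    "W2": rng.normal(scale=0.1, size=(h2, h1)), "b2": np.zeros(h2),
    "W3": rng.normal(scale=0.1, size=(1, h2)), "b3": np.zeros(1),
}
y_hat = fnn_forward(rng.normal(size=z_dim), params)
print(y_hat)
```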

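Code in practice

Data format

The data has 22 fields, each with a fixed number of enumerable attribute values, and every sample x is split into per-field segments accordingly.

Since this simulates CTR prediction, the label y is binary; in the experiments y∈{0,1}.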
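To make the per-field split concrete, here is a small dense NumPy illustration. It is not the actual utils.split_data from the repository, and the three field sizes are hypothetical (the real data has 22 fields):

```python
import numpy as np

# Hypothetical field sizes; the dataset in this post has 22 fields.
field_sizes = [2, 3, 2]
field_offsets = np.cumsum([0] + field_sizes)  # [0, 2, 5, 7]

def split_by_field(X, offsets):
    """Split a (num_samples, num_features) one-hot matrix into per-field blocks."""
    return [X[:, offsets[i]:offsets[i + 1]] for i in range(len(offsets) - 1)]

# Two toy samples; each field has exactly one active feature.
X = np.array([
    [0, 1, 1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1, 1, 0],
])
y = np.array([1, 0])  # binary click labels

fields = split_by_field(X, field_offsets)
for i, block in enumerate(fields):
    print("field", i, "shape", block.shape)
```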

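Saving and loading parameters

The FNN reference code has no FM initialization, only the MLP; I added that part myself.

The usual way to save parameters is tf.train.Saver: either save all of the model's parameters and values and later load some or all of them, or save only selected parameters and values and load the ones you need. To stay consistent with the interface of the reference code, we do not use tf.train.Saver. Instead we fetch the parameter values directly, build a dictionary, and save it locally: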

```python
import pickle as pkl

# Method of the model class. The signature line is missing from this excerpt,
# so the name `dump` is an assumption.
def dump(self, model_path):
    # Alternative using tf.train.Saver, kept for reference:
    # weight = [self.vars['w'], self.vars['v'], self.vars['b']]
    # saver = tf.train.Saver(weight)
    # saver.save(self.sess, model_path)
    # print(self.sess.run(self.vars['w']))
    # print(self.sess.run('w:0'))
    # print(self.vars['w'])
    # for i, j in self.vars.items():
    #     print(i, j)
    #     print(self.sess.run(j))

    # Evaluate every variable and store its value under the variable's name.
    var_map = {}
    for name, var in self.vars.items():
        print('----------------', name, var)
        var_map[name] = self.sess.run(var)
    with open(model_path, 'wb') as f:
        pkl.dump(var_map, f)
    print('model dumped at', model_path)

    # Read the file back as a sanity check.
    with open(model_path, 'rb') as f:
        load_var_map = pkl.load(f)
    print('load_var_map[w]', load_var_map['w'])
```
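The same dictionary round trip can be tried without TensorFlow. In this minimal sketch the helper names, array shapes, and temporary file path are all made up for illustration:

```python
import os
import pickle as pkl
import tempfile

import numpy as np

def save_var_map(var_map, path):
    """Pickle a {name: ndarray} dictionary to disk."""
    with open(path, "wb") as f:
        pkl.dump(var_map, f)

def load_var_map(path):
    """Load the dictionary written by save_var_map."""
    with open(path, "rb") as f:
        return pkl.load(f)

# Stand-ins for the FM parameters w, v and b.
var_map = {"w": np.zeros((7, 1)), "v": np.ones((7, 4)), "b": np.zeros(1)}
path = os.path.join(tempfile.mkdtemp(), "fm.pkl")
save_var_map(var_map, path)
restored = load_var_map(path)
print(sorted(restored))
```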

pkl.dump can save many kinds of data, which pkl.load reads back. Here is the loading part:

```python
# feature_size is the total number of one-hot features (presumably sum(field_sizes)).
init_vars.append(('w', [feature_size, 1], 'fm', dtype))
init_vars.append(('v', [feature_size, embed_size], 'fm', dtype))
init_vars.append(('b', [1, ], 'fm', dtype))

self.vars = utils.init_var_map(init_vars, init_path)
# Each row of init_w0 is [w_j, v_j] for feature j.
init_w0 = tf.concat([self.vars['w'], self.vars['v']], 1)
# Slice the rows field by field to get one embedding matrix per field.
lower, upper = 0, field_sizes[0]
for i in range(num_inputs):
    if i != 0:
        lower, upper = upper, upper + field_sizes[i]
    self.vars['embed_%d' % i] = init_w0[lower:upper]
w0 = [self.vars['embed_%d' % i] for i in range(num_inputs)]
```
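The row slicing can be reproduced in NumPy with np.split, which takes the field boundaries directly. The field sizes and parameter values here are hypothetical, chosen so the result is easy to inspect:

```python
import numpy as np

field_sizes = [2, 3, 2]  # hypothetical
K = 4                    # hypothetical latent dimension
feature_size = sum(field_sizes)

# Stand-ins for the loaded FM parameters.
w = np.arange(feature_size, dtype=float).reshape(-1, 1)
v = np.ones((feature_size, K))
init_w0 = np.concatenate([w, v], axis=1)  # shape (feature_size, K+1)

# Row indices where one field ends and the next begins: [2, 5].
boundaries = np.cumsum(field_sizes)[:-1]
w0 = np.split(init_w0, boundaries, axis=0)

for i, embed in enumerate(w0):
    print("embed_%d" % i, embed.shape)
```

Because w was filled with row indices, the first column of each block shows which rows of init_w0 ended up in which field.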

The init_var_map function is as follows:

```python
import pickle as pkl

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

# STDDEV, MINVAL and MAXVAL are module-level constants defined elsewhere in utils.

# Signature inferred from the call utils.init_var_map(init_vars, init_path).
def init_var_map(init_vars, init_path=None):
    if init_path is not None:
        with open(init_path, 'rb') as f:
            load_var_map = pkl.load(f)
        print('load variable map from', init_path, load_var_map.keys())
    var_map = {}
    for var_name, var_shape, init_method, dtype in init_vars:
        if init_method == 'zero':
            var_map[var_name] = tf.Variable(tf.zeros(var_shape, dtype=dtype), name=var_name, dtype=dtype)
        elif init_method == 'one':
            var_map[var_name] = tf.Variable(tf.ones(var_shape, dtype=dtype), name=var_name, dtype=dtype)
        elif init_method == 'normal':
            var_map[var_name] = tf.Variable(tf.random_normal(var_shape, mean=0.0, stddev=STDDEV, dtype=dtype),
                                            name=var_name, dtype=dtype)
        elif init_method == 'tnormal':
            var_map[var_name] = tf.Variable(tf.truncated_normal(var_shape, mean=0.0, stddev=STDDEV, dtype=dtype),
                                            name=var_name, dtype=dtype)
        elif init_method == 'uniform':
            var_map[var_name] = tf.Variable(tf.random_uniform(var_shape, minval=MINVAL, maxval=MAXVAL, dtype=dtype),
                                            name=var_name, dtype=dtype)
        elif init_method == 'xavier':
            maxval = np.sqrt(6. / np.sum(var_shape))
            minval = -maxval
            value = tf.random_uniform(var_shape, minval=minval, maxval=maxval, dtype=dtype)
            var_map[var_name] = tf.Variable(value, name=var_name, dtype=dtype)
        elif isinstance(init_method, (int, float)):
            var_map[var_name] = tf.Variable(tf.ones(var_shape, dtype=dtype) * init_method, name=var_name, dtype=dtype)
        elif init_method == 'fm':
            # Use the values loaded from the FM dump; var_shape is not used here.
            var_map[var_name] = tf.Variable(load_var_map[var_name], name=var_name, dtype=dtype)
        else:
            print('BadParam: init method', init_method)
    return var_map
```
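The 'xavier' branch draws uniformly from [-limit, limit] with limit = sqrt(6 / sum(shape)). A NumPy sketch of just that rule follows; the function name and the example shape are chosen here for illustration:

```python
import numpy as np

def xavier_uniform(shape, rng):
    """Uniform samples in [-limit, limit], limit = sqrt(6 / sum(shape))."""
    limit = np.sqrt(6.0 / np.sum(shape))
    return rng.uniform(-limit, limit, size=shape)

rng = np.random.default_rng(0)
shape = (3, 129)  # e.g. a field with 3 features and embed_size 129
embed = xavier_uniform(shape, rng)
limit = np.sqrt(6.0 / sum(shape))
print(embed.shape, float(np.abs(embed).max()) <= limit)
```

For a 2-D shape, sum(shape) is fan_in + fan_out, so this matches the usual Glorot uniform bound.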

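How to use the model

Debugging proceeds in two steps: first set algo='fm' to obtain the first-order coefficients w and the latent vectors v, and save the parameters; then set algo='fnn' to run CTR prediction. Note that embed_size is 129 because each embedding row is w concatenated with the 128-dimensional v (factor_order is 128).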

```python
# utils, FM, FNN, train_data, test_data, field_sizes, input_dim and pkl_path
# are defined elsewhere in the script.
algo = 'fnn'
if algo in {'fnn', 'anfm', 'amlp', 'ccpm', 'pnn1', 'pnn2'}:
    # These models take per-field inputs.
    train_data = utils.split_data(train_data)
    test_data = utils.split_data(test_data)
    field_sizes = [x for x in field_sizes if x > 0]
    print('remove empty fields', field_sizes)

if algo == 'fm':
    fm_params = {
        'input_dim': input_dim,
        'factor_order': 128,
        'opt_algo': 'gd',
        'learning_rate': 0.1,
        'l2_w': 0,
        'l2_v': 0,
    }
    print(fm_params)
    model = FM(**fm_params)
elif algo == 'fnn':
    fnn_params = {
        'field_sizes': field_sizes,
        'embed_size': 129,  # 1 (w) + 128 (v, factor_order above)
        'layer_sizes': [500, 1],
        'layer_acts': ['relu', None],
        'drop_out': [0, 0],
        'opt_algo': 'gd',
        'learning_rate': 0.1,
        'embed_l2': 0,
        'layer_l2': [0, 0],
        'random_seed': 0,
        'init_path': pkl_path,
    }
    print(fnn_params)
    model = FNN(**fnn_params)
```

Results

When FNN uses 'xavier' initialization:

```python
# i is the field index
init_vars.append(('embed_%d' % i, [field_sizes[i], embed_size], 'xavier', dtype))
```

Running 10 epochs gives:

```
[0] evaluating...
[0] loss (with l2 norm):0.358097 train-auc: 0.610657 eval-auc: 0.661392
[1] training...
[1] evaluating...
[1] loss (with l2 norm):0.350506 train-auc: 0.624879 eval-auc: 0.679986
[2] training...
[2] evaluating...
[2] loss (with l2 norm):0.348581 train-auc: 0.631834 eval-auc: 0.688470
[3] training...
[3] evaluating...
[3] loss (with l2 norm):0.347268 train-auc: 0.637031 eval-auc: 0.694607
[4] training...
[4] evaluating...
[4] loss (with l2 norm):0.346279 train-auc: 0.641287 eval-auc: 0.699670
[5] training...
[5] evaluating...
[5] loss (with l2 norm):0.345490 train-auc: 0.644798 eval-auc: 0.703892
[6] training...
[6] evaluating...
[6] loss (with l2 norm):0.344828 train-auc: 0.647727 eval-auc: 0.707407
[7] training...
[7] evaluating...
[7] loss (with l2 norm):0.344262 train-auc: 0.650155 eval-auc: 0.710297
[8] training...
[8] evaluating...
[8] loss (with l2 norm):0.343769 train-auc: 0.652261 eval-auc: 0.712707
[9] training...
[9] evaluating...
[9] loss (with l2 norm):0.343332 train-auc: 0.654116 eval-auc: 0.714787
```

After initializing from an FM trained for 50 iterations, FNN gives:

```
[0] evaluating...
[0] loss (with l2 norm):0.361066 train-auc: 0.607293 eval-auc: 0.642668
[1] training...
[1] evaluating...
[1] loss (with l2 norm):0.353281 train-auc: 0.634517 eval-auc: 0.679833
[2] training...
[2] evaluating...
[2] loss (with l2 norm):0.350498 train-auc: 0.640884 eval-auc: 0.688085
[3] training...
[3] evaluating...
[3] loss (with l2 norm):0.347988 train-auc: 0.648423 eval-auc: 0.696806
[4] training...
[4] evaluating...
[4] loss (with l2 norm):0.345739 train-auc: 0.657166 eval-auc: 0.706803
[5] training...
[5] evaluating...
[5] loss (with l2 norm):0.343678 train-auc: 0.665929 eval-auc: 0.716429
[6] training...
[6] evaluating...
[6] loss (with l2 norm):0.341738 train-auc: 0.674693 eval-auc: 0.725318
[7] training...
[7] evaluating...
[7] loss (with l2 norm):0.339869 train-auc: 0.682893 eval-auc: 0.733139
[8] training...
[8] evaluating...
[8] loss (with l2 norm):0.338055 train-auc: 0.690134 eval-auc: 0.739590
[9] training...
[9] evaluating...
[9] loss (with l2 norm):0.336269 train-auc: 0.696557 eval-auc: 0.744801
```

The AUC is higher: after 10 epochs, eval-auc rises from 0.714787 to 0.744801, a clear improvement.
