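Image environment: CentOS 7 (64-bit), available from the official site.

Hadoop version: hadoop-2.8.5, available from the official Apache Hadoop site.

Hadoop requires a JDK to be installed first. JDK installation is simple and is not covered in detail here.

1. Download Hadoop. The file used here is hadoop-2.8.5.tar.gz.

2. Install Hadoop

(1) Create a directory named soft on the virtual machine. Command: mkdir soft

(2) Upload the downloaded Hadoop archive to the virtual machine, for example with FileZilla.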

(3) Extract Hadoop into /usr/local/ with the following command:

    tar -zxvf hadoop-2.8.5.tar.gz -C /usr/local/

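(4) Add Hadoop to the system environment variables. Run vi /etc/profile, press i to enter insert mode, and add the lines below. HADOOP_HOME must be defined because the PATH line refers to it; the value shown is the directory created by the extraction in step (3). The MySQL and Tomcat lines are specific to this machine and are not needed by Hadoop.

    export HADOOP_HOME=/usr/local/hadoop-2.8.5
    export JAVA_HOME=/usr/local/jdk
    export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
    export PATH=$JAVA_HOME/bin:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
    export PATH=$PATH:/soft/mysql/bin
    export CATALINA_HOME=/usr/local/tomcat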

Press Esc, then type :wq to save and exit.

Finally, run source /etc/profile so that the new environment variables take effect.
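
As an alternative to editing /etc/profile by hand, the exports can be kept in a separate file. The sketch below assumes the paths used in this article; the function name write_hadoop_env and the file name /etc/profile.d/hadoop.sh are illustrative choices, not something the original setup uses. The demo writes to a temporary file so nothing under /etc is touched.

```shell
#!/bin/sh
# Sketch: write the Hadoop-related exports to a file and load them.
# Paths are the ones used in this article; adjust them to your machine.

write_hadoop_env() {
  # $1 = file to write (for example /etc/profile.d/hadoop.sh when run as root)
  cat > "$1" <<'EOF'
export JAVA_HOME=/usr/local/jdk
export HADOOP_HOME=/usr/local/hadoop-2.8.5
export PATH=$JAVA_HOME/bin:$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
EOF
}

# Demo against a temporary file instead of /etc/profile.d/
env_file=$(mktemp)
write_hadoop_env "$env_file"
. "$env_file"                      # same effect as `source` in bash
echo "HADOOP_HOME=$HADOOP_HOME"
```

On CentOS, files matching /etc/profile.d/*.sh are read by /etc/profile at login, so a file written there takes effect for new sessions without further edits.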

(5) After the steps above, Hadoop should be installed. Check with the hadoop version command; a successful installation prints output like this:

    Hadoop 2.8.5
    Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 0b8464d75227fcee2c6e7f2410377b3d53d3d5f8
    Compiled by jdu on 2018-09-10T03:32Z
    Compiled with protoc 2.5.0
    From source with checksum 9942ca5c745417c14e318835f420733
    This command was run using /usr/local/hadoop-2.8.5/share/hadoop/common/hadoop-common-2.8.5.jar
    [root@cluster-3 ~]#
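
The version check can also be scripted. This is a minimal sketch; parse_hadoop_version is a hypothetical helper name, and it assumes the first line of the output has the form "Hadoop <version>" as in the listing above.

```shell
#!/bin/sh
# Sketch: confirm that the expected Hadoop version is on PATH.

parse_hadoop_version() {
  # Reads `hadoop version` output on stdin and prints the version number
  awk 'NR == 1 && $1 == "Hadoop" { print $2 }'
}

if command -v hadoop >/dev/null 2>&1; then
  installed=$(hadoop version | parse_hadoop_version)
else
  installed=""
fi

if [ "$installed" = "2.8.5" ]; then
  echo "Hadoop 2.8.5 is on PATH"
else
  echo "Hadoop 2.8.5 not found on PATH (got: '${installed:-none}')"
fi
```

If the second message appears right after editing /etc/profile, the usual cause is that source /etc/profile has not been run in the current shell.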

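3. Hadoop configuration (only 5 files need to be configured)

The following 3 settings are auxiliary system settings rather than Hadoop's own configuration:

(1) Change the host name (mine is cluster-3)

Command: vi /etc/sysconfig/network, then add the following line. Note that CentOS 7 normally reads the persistent host name from /etc/hostname, so hostnamectl set-hostname cluster-3 may be needed instead.

    HOSTNAME=cluster-3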

(2) Register the cluster nodes. Command: vi /etc/hosts. Add one line with the IP address and host name for each node in the cluster, as shown below:

    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.79.130 cluster-3
    192.168.79.131 cluster-1
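
When several nodes have to be registered, appending entries by script avoids duplicates. The sketch below is one way to do it; add_host_entry is a hypothetical helper, and the demo works on a temporary file rather than the real /etc/hosts.

```shell
#!/bin/sh
# Sketch: append "IP hostname" to a hosts file only if the name is not there yet.

add_host_entry() {
  # $1 = IP address, $2 = host name, $3 = hosts file (defaults to /etc/hosts)
  ip=$1; name=$2; file=${3:-/etc/hosts}
  if awk -v n="$name" '
       /^[[:space:]]*#/ { next }                        # ignore comment lines
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file"; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo on a temporary file; use /etc/hosts (as root) on a real node.
hosts_file=$(mktemp)
add_host_entry 192.168.79.130 cluster-3 "$hosts_file"
add_host_entry 192.168.79.131 cluster-1 "$hosts_file"
add_host_entry 192.168.79.130 cluster-3 "$hosts_file"   # no-op: already present
cat "$hosts_file"
```

The check compares whole host-name fields, so an existing entry for cluster-10 would not block adding cluster-1.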

(3) Edit the hosts file on Windows. Go to C:\Windows\System32\drivers\etc, open the hosts file as administrator, and add the IP addresses and host names as shown below:

    127.0.0.1 localhost
    ::1 localhost
    192.168.79.132 weekend110
    192.168.79.131 cluster-1
    192.168.79.130 cluster-3

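The following 5 files are Hadoop's main configuration files. Change to /usr/local/hadoop-2.8.5/etc/hadoop to edit them.

(1) hadoop-env.sh: set the JDK path. The shipped default is ${JAVA_HOME}; hard-code it to the actual JDK directory, which is /usr/local/jdk in this setup (the same value as in /etc/profile):

    export JAVA_HOME=/usr/local/jdk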

(2) core-site.xml

Create a directory named data under hadoop-2.8.5 to hold temporary files, then configure the file as follows:

    <configuration>
    <property>
    <name>fs.defaultFS</name>
    <value>hdfs://cluster-3:9000</value> <!-- host name and port -->
    </property>
    <!-- directory where Hadoop stores the files it generates at run time -->
    <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop-2.8.5/data</value>
    </property>
    </configuration>
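
If the same file has to be produced on several nodes, it can be generated from a few parameters. The following is a sketch only; write_core_site is a hypothetical helper, and the demo writes to a temporary file rather than to etc/hadoop/core-site.xml.

```shell
#!/bin/sh
# Sketch: generate core-site.xml from host, port and temp directory.

write_core_site() {
  # $1 = output file, $2 = NameNode host, $3 = port, $4 = hadoop.tmp.dir
  cat > "$1" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://$2:$3</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$4</value>
  </property>
</configuration>
EOF
}

# Demo with the values used in this article.
# On a real node, create the data directory first:
#   mkdir -p /usr/local/hadoop-2.8.5/data
out=$(mktemp)
write_core_site "$out" cluster-3 9000 /usr/local/hadoop-2.8.5/data
cat "$out"
```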

(3) hdfs-site.xml

    <configuration>
    <property>
    <name>dfs.replication</name>
    <value>1</value> <!-- number of HDFS replicas; choose it according to how many machines you have -->
    </property>
    </configuration>

(4) mapred-site.xml

Rename mapred-site.xml.template to mapred-site.xml. Command: mv mapred-site.xml.template mapred-site.xml

Then add the following property inside the file's <configuration> element:

    <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
    </property>

(5) yarn-site.xml

Add the following properties inside the file's <configuration> element:

    <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>cluster-3</value> <!-- host name -->
    </property>
    <!-- how reducers fetch data -->
    <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
    </property>
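
Before starting the cluster it can help to read the values back from the edited files. The sketch below uses a small awk-based helper, get_prop (a hypothetical name), and assumes each <name> and <value> tag sits on its own line as in the listings above; it is not a general XML parser. The demo runs against a temporary sample file.

```shell
#!/bin/sh
# Sketch: print the <value> that follows a given <name> in a Hadoop config file.

get_prop() {
  # $1 = config file, $2 = property name
  awk -v key="$2" '
    /<name>/ {
      n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n)
      found = (n == key)
    }
    /<value>/ && found {
      v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
      print v; exit
    }
  ' "$1"
}

# Demo file with the yarn-site.xml properties from this article
sample=$(mktemp)
cat > "$sample" <<'EOF'
<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>cluster-3</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF

get_prop "$sample" yarn.nodemanager.aux-services   # prints mapreduce_shuffle
```

On a node with Hadoop on PATH, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves, which is the more reliable check for HDFS-side settings.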

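4. Start the Hadoop cluster

(1) Format the NameNode (this initializes it)

Command: hdfs namenode -format (the older form hadoop namenode -format also works)

(2) Start Hadoop (start-all.sh can also be used to start both HDFS and YARN, although it is deprecated)

Start HDFS first. Command: start-dfs.sh

Then start YARN. Command: start-yarn.sh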

(3) Verify that startup succeeded with the jps command. The listing below shows the output of start-all.sh followed by jps:

    This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
    Starting namenodes on [cluster-3]
    cluster-3: starting namenode, logging to /usr/local/hadoop-2.8.5/logs/hadoop-root-namenode-cluster-3.out
    localhost: starting datanode, logging to /usr/local/hadoop-2.8.5/logs/hadoop-root-datanode-cluster-3.out
    Starting secondary namenodes [0.0.0.0]
    0.0.0.0: starting secondarynamenode, logging to /usr/local/hadoop-2.8.5/logs/hadoop-root-secondarynamenode-cluster-3.out
    starting yarn daemons
    starting resourcemanager, logging to /usr/local/hadoop-2.8.5/logs/yarn-root-resourcemanager-cluster-3.out
    localhost: starting nodemanager, logging to /usr/local/hadoop-2.8.5/logs/yarn-root-nodemanager-cluster-3.out
    [root@cluster-3 hadoop]# jps
    9108 DataNode
    9828 Jps
    9413 ResourceManager
    9261 SecondaryNameNode
    9535 NodeManager
    [root@cluster-3 hadoop]#

A working single-node setup is expected to show NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. The captured listing does not include a NameNode line; if yours does not either, check the NameNode log files under /usr/local/hadoop-2.8.5/logs.
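
Checking the jps listing by eye is easy to get wrong, so here is a small sketch that reports which of the expected daemons are absent. missing_daemons is a hypothetical helper; the list of expected daemons assumes a single node running both HDFS and YARN, as in this article. The sample input is the jps listing captured above.

```shell
#!/bin/sh
# Sketch: read jps output on stdin and print each expected daemon that is missing.

missing_daemons() {
  jps_output=$(cat)
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the whole second field so NameNode does not match SecondaryNameNode
    printf '%s\n' "$jps_output" |
      awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' || echo "$d"
  done
}

# Sample input copied from the jps listing in this article
sample='9108 DataNode
9828 Jps
9413 ResourceManager
9261 SecondaryNameNode
9535 NodeManager'

printf '%s\n' "$sample" | missing_daemons   # prints NameNode
```

On a live node the same check is jps | missing_daemons; empty output means all five daemons are running.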

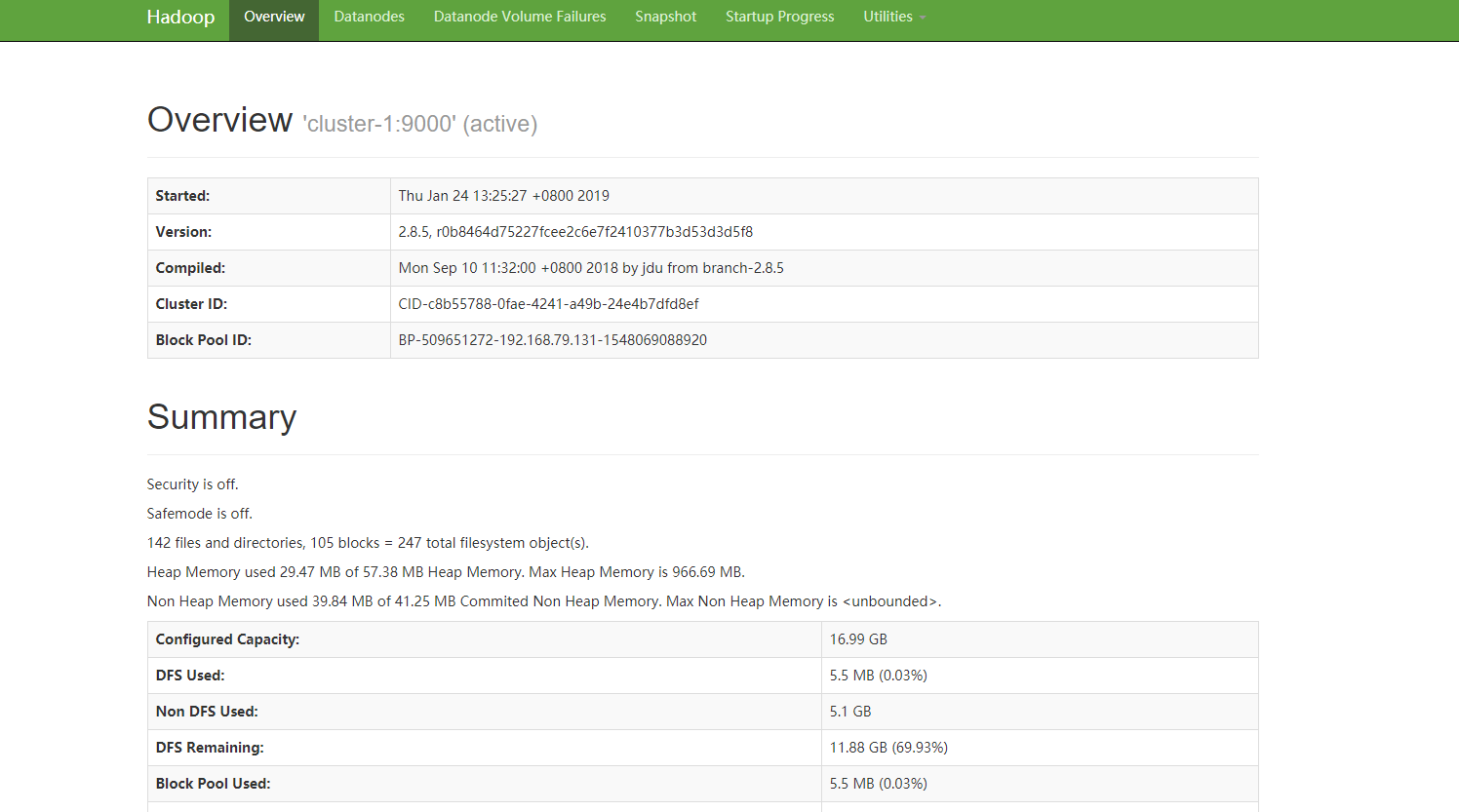

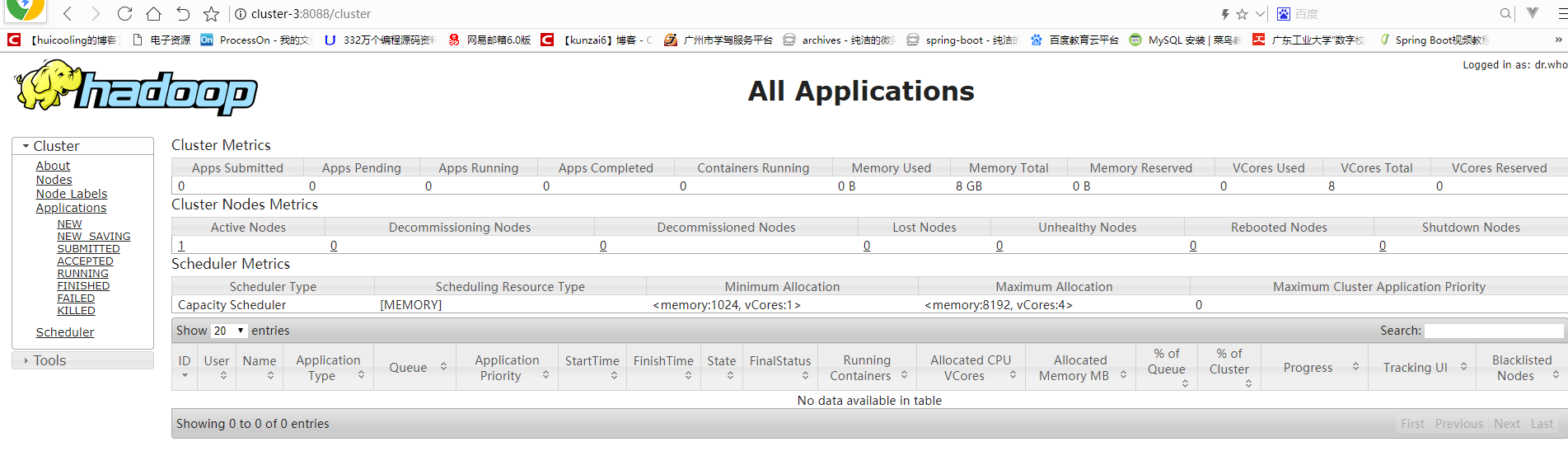

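(4) After a successful start, the web interfaces can be opened in a browser:

cluster-3:50070 (HDFS management UI)

cluster-3:8088 (MapReduce/YARN management UI)

If the pages do not load, turn off the firewall with systemctl stop firewalld; check its state with systemctl status firewalld.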

[Screenshot: HDFS management UI]

[Screenshot: MapReduce/YARN management UI]

5. Configure passwordless login. Run ssh-keygen -t rsa, then continue as described below. The .ssh directory then looks like this:

    [root@cluster-3 .ssh]# ll
    total 20
    -rw-r--r--. 1 root root 793 Jan 21 22:34 authorized_keys
    -rw-------. 1 root root 1675 Jan 21 21:55 id_rsa
    -rw-r--r--. 1 root root 396 Jan 21 21:56 id_rsa.pub
    -rw-r--r--. 1 root root 396 Jan 21 22:34 id_rsa.pub-1
    -rw-r--r--. 1 root root 712 Jan 21 22:35 known_hosts
    [root@cluster-3 .ssh]#

If the authorized_keys file does not exist, create it, then append the public key to it. Command: cat id_rsa.pub >> authorized_keys

Passwordless login now works.
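
Running the cat command twice adds the key twice, and sshd may ignore the file if its permissions are too open. The sketch below appends a key only once and tightens the permissions; authorize_key is a hypothetical helper, and the demo uses a temporary directory with a placeholder string instead of a real key.

```shell
#!/bin/sh
# Sketch: add a public key to authorized_keys once, with strict permissions.

authorize_key() {
  # $1 = public key file, $2 = .ssh directory
  pub=$1; dir=$2; auth="$dir/authorized_keys"
  mkdir -p "$dir" && chmod 700 "$dir"
  touch "$auth" && chmod 600 "$auth"
  # Append only if this exact key line is not already present
  grep -qxF "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
}

# Demo in a temporary directory; the key below is a placeholder, not a real key.
demo_dir=$(mktemp -d)
echo "ssh-rsa AAAAEXAMPLEKEY root@cluster-3" > "$demo_dir/id_rsa.pub"
authorize_key "$demo_dir/id_rsa.pub" "$demo_dir"
authorize_key "$demo_dir/id_rsa.pub" "$demo_dir"   # second call adds nothing
wc -l < "$demo_dir/authorized_keys"
```

On a real node the call would be authorize_key ~/.ssh/id_rsa.pub ~/.ssh. To authorize the key on another node such as cluster-1, ssh-copy-id root@cluster-1 does the equivalent remotely.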

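6. Hadoop shell commands

hadoop fs -ls /   list the files in the root directory

hadoop fs -mkdir /aaa   create the directory aaa under the root directory

hadoop fs -put a.txt /aaa   upload the file a.txt to the aaa directory in HDFS

hadoop fs -rm -r /aaa/a.txt   delete the file a.txt from aaa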
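
The commands above can be chained into a quick smoke test. The sketch below is a dry run by default: it only prints the commands unless DRY_RUN=0 is set, so it can be read and tried without a running cluster. The helper names run and hdfs_round_trip are hypothetical, and a.txt and /aaa are the example names from this section.

```shell
#!/bin/sh
# Sketch: create a directory, upload a file, list it, and delete it again.
# DRY_RUN=1 (the default here) prints each command instead of executing it.

DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

hdfs_round_trip() {
  # $1 = local file, $2 = HDFS directory
  run hadoop fs -mkdir -p "$2" &&
  run hadoop fs -put "$1" "$2" &&
  run hadoop fs -ls "$2" &&
  run hadoop fs -rm "$2/$(basename "$1")"
}

hdfs_round_trip a.txt /aaa
```

The -p flag makes -mkdir succeed when the directory already exists, which keeps the script repeatable. Run it with DRY_RUN=0 on a node where HDFS is up and a.txt exists in the current directory.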