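Problem: the Redis in use is an old version, 3.2.4. It needs to be upgraded to 5.0.5, and its data has to be migrated.

I. Before the migration

1. The old Redis version is already running.

2. Deploy the new Redis version. A standalone instance is used as the example here.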

```
# Create the redis directories and configure redis.conf
cat /data/server/redis5/redis.conf

daemonize no
requirepass 123456
protected-mode no
port 7001
dir /data/redis5
pidfile redis.pid
bind 0.0.0.0
tcp-backlog 511
timeout 0
tcp-keepalive 0
loglevel notice
logfile /data/logs/redis5/redis.log
databases 16
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error yes
rdbcompression yes
rdbchecksum yes
dbfilename dump.rdb
slave-serve-stale-data yes
slave-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5
repl-disable-tcp-nodelay no
slave-priority 100
maxmemory 1073741824
appendonly yes
appendfilename "appendonly.aof"
appendfsync everysec
no-appendfsync-on-rewrite no
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
aof-load-truncated yes
lua-time-limit 5000
#cluster-enabled yes
#cluster-config-file nodes.conf
#cluster-node-timeout 15000
#cluster-slave-validity-factor 10
slowlog-log-slower-than 10000
slowlog-max-len 128
latency-monitor-threshold 0
notify-keyspace-events ""
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-entries 512
list-max-ziplist-value 64
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
hz 10
```

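```
# Start the redis5 container.
# The data volume is mounted at /data/redis5, the `dir` set in redis.conf; if that
# directory does not exist inside the container, Redis cannot chdir to it and exits.
docker run -itd --restart=always --name redis5 --net=host \
  -v /data/logs/redis5:/data/logs/redis5:rw \
  -v /data/server/redis5/data:/data/redis5:rw \
  -v /data/server/redis5/redis.conf:/data/redis.conf \
  redis:5.0.5 redis-server /data/redis.conf
```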
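Because `dir` and `logfile` in redis.conf are paths inside the container, they only reach the host if they sit at or below the container-side target of one of the `-v` options. For example, `dir /data/redis5` needs a `-v ...:/data/redis5` mount. If `dir` does not exist in the container at all, Redis refuses to start. The following is a minimal pre-flight sketch, assuming a simple redis.conf with unquoted values. `conf_get` and `under_mount` are hypothetical helper names and are not part of Redis or Docker:

```shell
# Print the value of a single-argument directive (e.g. dir, logfile, port) from a redis.conf file.
# Simplified: assumes unquoted values without spaces; the last occurrence wins.
conf_get() {
  awk -v key="$1" '$1 == key { val = $2 } END { if (val != "") print val }' "$2"
}

# Succeed if container path $1 equals, or lies below, one of the mount targets passed after it.
under_mount() {
  path="$1"; shift
  for target in "$@"; do
    case "$path" in
      "$target"|"$target"/*) return 0 ;;
    esac
  done
  return 1
}

# Usage sketch: pass the container-side half of each -v option from your docker run command.
# conf=/data/server/redis5/redis.conf
# under_mount "$(conf_get dir "$conf")" /data/logs/redis5 /data/redis5 || echo "dir is not on a mounted volume"
```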

Then check that the redis5 service is running normally.
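One way to run this check is to ping the new instance and read its version from `INFO server`. The redis:5.0.5 image ships `redis-cli`, and the port and password come from the redis.conf above. This is a sketch only: `redis_version_from_info` is a hypothetical helper, and Redis 5's `redis-cli` prints a warning to stderr when `-a` is used.

```shell
# Extract redis_version from `INFO server` output read on stdin (INFO lines end in CRLF).
redis_version_from_info() {
  tr -d '\r' | awk -F: '$1 == "redis_version" { print $2 }'
}

# Usage sketch:
# docker exec redis5 redis-cli -p 7001 -a 123456 ping          # expect PONG
# docker exec redis5 redis-cli -p 7001 -a 123456 INFO server | redis_version_from_info   # expect 5.0.5
```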

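II. Redis data sync/migration (using Alibaba Cloud's redis-shake)

redis-shake is an open-source Redis data synchronization tool from Alibaba Cloud's Redis & MongoDB team. Download the latest release from the RedisShake GitHub project.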

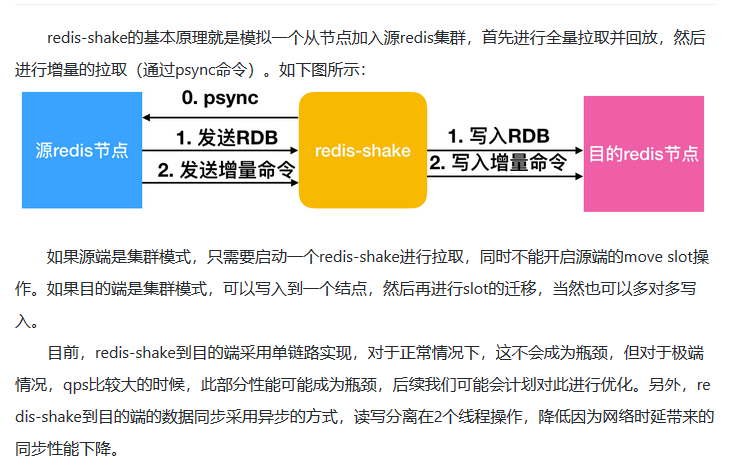

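How it works:

- redis-shake poses as a replica (slave) of the source Redis. It first pulls the full data set and replays it on the target, then keeps pulling incremental changes (via the sync command).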
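Because redis-shake attaches to the source the way a replica does, you can see it connected while a sync is running by checking the source's `INFO replication` output. Replicas are listed there as `slaveN:ip=...,port=...,state=...` lines. The sketch below parses those lines. `list_replicas` is a hypothetical helper, and the ip/port the source reports for redis-shake depends on what redis-shake sends during its replication handshake.

```shell
# Print "ip port state" for each replica line in `INFO replication` output read on stdin.
list_replicas() {
  tr -d '\r' | awk -F'[=,]' '/^slave[0-9]+:ip=/ { print $2, $4, $6 }'
}

# Usage sketch against the old 3.2.4 instance while redis-shake is running
# (assumes redis-cli is available on the host):
# redis-cli -h 192.168.16.133 -p 6379 INFO replication | list_replicas
```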

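Main features:

- restore: restore an RDB file into the target database.

- dump: back up the full data set of the source Redis into an RDB file.

- decode: read an RDB file and parse it into JSON.

- sync: synchronize data from a source Redis to a target Redis, covering both full and incremental migration. It works from other clouds to Alibaba Cloud and between on-premises instances, and it supports any combination of standalone, master-replica and cluster deployments. If the source is a cluster, a single redis-shake instance can pull from its different db nodes, but slot migration (move slot) must not be enabled on the source. If the target is a cluster, writes can go to one or more of its db nodes.

- rump: synchronize data from a source Redis to a target Redis using the SCAN and RESTORE commands. It supports full migration only, but it works across different Redis versions from different cloud vendors.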
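You choose one of these modes when you launch redis-shake. In the 1.6.x releases used here, the binary takes the configuration file and the mode as `-conf` and `-type` flags; check `./redis-shake.linux --help` for your version. The log further down reports the mode as `"Type":"sync"`. The following is a small hypothetical wrapper, not part of redis-shake, that validates the mode before building the command line:

```shell
# Print the redis-shake command line for a given mode; reject unknown modes.
# Assumes the Linux binary from the 1.6.x release archive and its -conf/-type flags.
shake_cmd() {
  mode="$1"
  conf="${2:-redis-shake.conf}"
  case "$mode" in
    restore|dump|decode|sync|rump)
      printf './redis-shake.linux -conf=%s -type=%s\n' "$conf" "$mode" ;;
    *)
      echo "unknown redis-shake mode: $mode" >&2
      return 2 ;;
  esac
}
```

For example, `shake_cmd sync` prints `./redis-shake.linux -conf=redis-shake.conf -type=sync`. That is the mode used in this article for full plus incremental migration.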

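Supported:

- Sync between Redis versions 2.8 and 5.0.

- Codis.

- On-premises to cloud, cloud to cloud, cloud to on-premises (on Alibaba Cloud currently only for the master-replica edition), other clouds to Alibaba Cloud, and similar paths, which makes it easy to build hybrid-cloud setups.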
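Before migrating, you can run a quick check against the 2.8 to 5.0 range listed above. The sketch compares only the major.minor part of a version string, such as the `redis_version` field of `INFO server`. `shake_supported` is a hypothetical helper, and the range applies to the redis-shake release used in this article.

```shell
# Succeed if a Redis version string (e.g. 3.2.4) satisfies 2.8 <= major.minor <= 5.0.
shake_supported() {
  [ -n "$1" ] || return 1
  printf '%s\n' "$1" | awk -F. '{ v = $1 * 100 + $2; exit !(v >= 208 && v <= 500) }'
}

# Usage sketch: shake_supported 3.2.4 && shake_supported 5.0.5 && echo "both ends supported"
```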

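1. Download the latest redis-shake release, extract it and edit the configuration. The default configuration is shown below. Most of these settings are not needed here, so keep only the ones we use and adjust them.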

```
# if you have any problem, please visit https://github.com/alibaba/RedisShake/wiki/FAQ

# id
id = redis-shake

# log file. If not set, logs are printed to stdout (e.g. /var/log/redis-shake.log)
log.file =
# log level: "none", "error", "warn", "info", "debug", "all". default is "info". "debug" == "all"
log.level = info
# pid path: directory for the pid file (e.g. /var/run/). If not set, the working directory is used.
# Note this is a directory; the actual pid file is `{pid_path}/{id}.pid`
pid_path =

# pprof port.
system_profile = 9310
# restful port, set -1 means disable, in `restore` mode RedisShake will exit once finish restoring RDB only if this value
# is -1, otherwise, it'll wait forever.
# This is also the port for viewing metrics.
http_profile = 9320

# parallel routines number used in RDB file syncing. default is 64.
parallel = 32

# source redis configuration.
# used in `dump`, `sync` and `rump`.
# source redis type, e.g. "standalone" (default), "sentinel" or "cluster".
# 1. "standalone": standalone db mode.
# 2. "sentinel": the redis address is read from sentinel.
# 3. "cluster": the source redis has several db.
# 4. "proxy": the proxy address, currently, only used in "rump" mode.
source.type = standalone
# ip:port
# the source address can be the following:
# 1. single db address. for "standalone" type.
# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.
# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type. e.g., 10.1.1.1:20331;10.1.1.2:20441.
# 4. proxy address(used in "rump" mode only). for "proxy" type.
# For other cluster architectures such as codis, twemproxy or aliyun proxy, list the db addresses of all masters or all slaves.
source.address = 192.168.16.133:6379
# password of db/proxy. even if type is sentinel.
source.password_raw = 123456
# auth type, don't modify it
source.auth_type = auth
# tls enable, true or false. Currently, only support standalone.
# open source redis does NOT support tls so far, but some cloud versions do.
source.tls_enable = false
# input RDB file.
# used in `decode` and `restore`.
# if the input is list split by semicolon(;), redis-shake will restore the list one by one.
# e.g., rdb.0;rdb.1;rdb.2
source.rdb.input = local
# the concurrence of RDB syncing, default is len(source.address) or len(source.rdb.input).
# used in `dump`, `sync` and `restore`. 0 means default.
# This is useless when source.type isn't cluster or only input is only one RDB.
# e.g., with 5 db nodes/input RDBs and rdb.parallel = 3, only 3 dbs are fully fetched at a time;
# the 4th db's RDB is fetched only after one of them finishes its RDB and enters incremental sync,
# and so on. In the end, len(source.address) or len(rdb.input) incremental routines run at the same time.
source.rdb.parallel = 0
# for special cloud vendor: ucloud
# used in `decode` and `restore`.
# RDB files of the ucloud cluster edition carry a slot prefix; set ucloud_cluster to strip it.
source.rdb.special_cloud =

# target redis configuration. used in `restore`, `sync` and `rump`.
# the type of target redis can be "standalone", "proxy" or "cluster".
# 1. "standalone": standalone db mode.
# 2. "sentinel": the redis address is read from sentinel.
# 3. "cluster": open source cluster (not supported currently).
# 4. "proxy": proxy layer ahead redis. Data will be inserted in a round-robin way if more than 1 proxy given.
target.type = standalone
# ip:port
# the target address can be the following:
# 1. single db address. for "standalone" type.
# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.
# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type.
# 4. proxy address(used in "rump" mode only). for "proxy" type.
target.address = 192.168.16.133:7001
# password of db/proxy. even if type is sentinel.
target.password_raw = 123456
# auth type, don't modify it
target.auth_type = auth
# all the data will be written into this db. < 0 means disable.
target.db = -1
# tls enable, true or false. Currently, only support standalone.
# open source redis does NOT support tls so far, but some cloud versions do.
target.tls_enable = false
# output RDB file prefix.
# used in `decode` and `dump`.
# e.g., with 3 input dbs, the dumps are ${output_rdb}.0, ${output_rdb}.1, ${output_rdb}.2
target.rdb.output = local_dump
# some redis proxy like twemproxy doesn't support to fetch version, so please set it here.
# e.g., target.version = 4.0
target.version =

# use for expire key, set the time gap when source and target timestamp are not the same.
fake_time =

# force rewrite when destination restore has the key
# used in `restore`, `sync` and `rump`.
rewrite = true

# filter db, key, slot, lua.
# filter db.
# used in `restore`, `sync` and `rump`.
# e.g., "0;5;10" means match db0, db5 and db10.
# at most one of `filter.db.whitelist` and `filter.db.blacklist` parameters can be given.
# if the filter.db.whitelist is not empty, the given db list will be passed while others filtered.
# if the filter.db.blacklist is not empty, the given db list will be filtered while others passed.
# all dbs will be passed if no condition given.
filter.db.whitelist =
filter.db.blacklist =
# filter key with prefix string. multiple keys are separated by ';'.
# e.g., "abc;bzz" match let "abc", "abc1", "abcxxx", "bzz" and "bzzwww".
# used in `restore`, `sync` and `rump`.
# at most one of `filter.key.whitelist` and `filter.key.blacklist` parameters can be given.
# if the filter.key.whitelist is not empty, the given keys will be passed while others filtered.
# if the filter.key.blacklist is not empty, the given keys will be filtered while others passed.
# all the namespace will be passed if no condition given.
filter.key.whitelist =
filter.key.blacklist =
# filter given slot, multiple slots are separated by ';'.
# e.g., 1;2;3
# used in `sync`.
# only the given slots are passed.
filter.slot =
# filter lua script. true means not pass. However, in redis 5.0, the lua
# converts to transaction(multi+{commands}+exec) which will be passed.
filter.lua = false

# big key threshold, the default is 500 * 1024 * 1024 bytes. If the value is bigger than
# this given value, all the field will be spilt and write into the target in order. If
# the target Redis type is Codis, this should be set to 1, please checkout FAQ to find
# the reason.
# Keys below the threshold are written to the target directly with `restore`.
big_key_threshold = 524288000

# use psync command.
# used in `sync`.
# `sync` is used by default; enabling this switches to `psync`.
psync = false

# enable metric
# used in `sync`.
metric = true
# print in log
metric.print_log = false

# sender information.
# sender flush buffer size of byte.
# used in `sync`.
# once this many bytes are buffered, the buffer is force-flushed.
sender.size = 104857600
# sender flush buffer size of oplog number.
# used in `sync`. flush sender buffer when bigger than this threshold.
# for a cluster target, raising this value uses more memory.
sender.count = 4096
# delay channel size. once one oplog is sent to target redis, the oplog id and timestamp will also
# stored in this delay queue. this timestamp will be used to calculate the time delay when receiving
# ack from target redis.
# used in `sync`.
sender.delay_channel_size = 65535

# enable keep_alive option in TCP when connecting redis.
# the unit is second.
# 0 means disable.
keep_alive = 0

# used in `rump`.
# number of keys captured each time. default is 100.
scan.key_number = 50
# used in `rump`.
# we support some special redis types that don't use default `scan` command like alibaba cloud and tencent cloud.
# currently supported: Tencent Cloud cluster edition "tencent_cluster" and Alibaba Cloud cluster edition "aliyun_cluster".
scan.special_cloud =
# used in `rump`.
# we support to fetching data from given file which marks the key list.
# for cloud versions that support neither sync/psync nor scan, keys are read from this file (one key per line) and fetched.
scan.key_file =

# limit the rate of transmission. Only used in `rump` currently.
# e.g., qps = 1000 means pass 1000 keys per second. default is 500,000(0 means default)
qps = 200000

# ----------------splitter----------------
# below variables are useless for current open source version so don't set.

# replace hash tag.
# used in `sync`.
replace_hash_tag = false
```
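A rough way to shorten the default file is to drop comments, blank lines and keys left empty. The sketch below assumes a grep that supports `-E` and POSIX character classes, and `active_settings` is a hypothetical helper. The trimmed file below was also edited by hand. For example, `source.password_raw` and `source.auth_type` are left out, which is consistent with the old instance having no password (the sync log reports `input password is empty`).

```shell
# Print only active settings from a redis-shake.conf: drop comment lines, blank lines
# and keys with an empty value (e.g. "log.file =").
active_settings() {
  grep -Ev '^[[:space:]]*(#|$)' "$@" | grep -Ev '=[[:space:]]*$'
}

# Usage sketch: active_settings redis-shake.conf > redis-shake.trimmed.conf, then review by hand.
```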

```
[root@bb tmp]# cd redis-shake-1.6.23
[root@bb redis-shake-1.6.23]# ls
ChangeLog hypervisor redis-shake.conf redis-shake.darwin redis-shake.linux redis-shake.pid redis-shake.windows start.sh stop.sh
[root@bb-uat-db0 redis-shake-1.6.23]# cat redis-shake.conf

id = redis-shake
log.level = info
system_profile = 9310
http_profile = 9320
parallel = 32
source.type = standalone
source.address = 192.168.16.133:6379
source.tls_enable = false
source.rdb.input = local
source.rdb.parallel = 0
target.type = standalone
target.address = 192.168.16.133:7001
target.password_raw = 123456
target.auth_type = auth
target.db = -1
target.tls_enable = false
target.rdb.output = local_dump
rewrite = true
filter.lua = false
big_key_threshold = 524288000
psync = false
metric = true
metric.print_log = false
sender.size = 104857600
sender.count = 4096
sender.delay_channel_size = 65535
keep_alive = 0
scan.key_number = 50
qps = 200000
replace_hash_tag = false
```

2. Start the migration. When "sync rdb done" appears in the log, the full data sync is complete, and redis-shake moves on to incremental sync.
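The sketch below launches the sync in the background and polls for this message. It assumes the 1.6.x `-conf`/`-type` flags and that `log.file` is left empty, so the log goes to stdout. `wait_rdb_done` and `shake.log` are hypothetical names.

```shell
# Poll a redis-shake log until the full (RDB) phase finishes; give up after $2 seconds (default 600).
wait_rdb_done() {
  log="$1"
  left="${2:-600}"
  while [ "$left" -gt 0 ]; do
    if grep -q 'sync rdb done' "$log" 2>/dev/null; then
      return 0
    fi
    sleep 1
    left=$((left - 1))
  done
  return 1
}

# Usage sketch:
# ./redis-shake.linux -conf=redis-shake.conf -type=sync > shake.log 2>&1 &
# wait_rdb_done shake.log && echo "full sync done, now in incremental sync"
```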

```
2019/12/18 11:16:39 [INFO] input password is empty, skip auth address[192.168.16.133:6379] with type[].
2019/12/18 11:16:39 [INFO] input password is empty, skip auth address[192.168.16.133:6379] with type[].
2019/12/18 11:16:39 [INFO] source rdb[192.168.16.133:6379] checksum[yes]
2019/12/18 11:16:39 [WARN]
______________________________
\ \ _ ______ |
 \ \ / \___-=O'/|O'/__|
 \ RedisShake, here we go !! \_______\ / | / )
 / / '/-==__ _/__|/__=-| -GM
 / Alibaba Cloud / * \ | |
/ / (o)
------------------------------
if you have any problem, please visit https://github.com/alibaba/RedisShake/wiki/FAQ

2019/12/18 11:16:39 [INFO] redis-shake configuration: {"Id":"redis-shake","LogFile":"","LogLevel":"info","SystemProfile":9310,"HttpProfile":9320,"Parallel":32,"SourceType":"standalone","SourceAddress":"192.168.16.133:6379","SourcePasswordRaw":"","SourcePasswordEncoding":"","SourceAuthType":"","SourceTLSEnable":false,"SourceRdbInput":["local"],"SourceRdbParallel":1,"SourceRdbSpecialCloud":"","TargetAddress":"192.168.16.133:7001","TargetPasswordRaw":"bg8TjsqfaNIAxTjStlx7glEbLGxumy","TargetPasswordEncoding":"","TargetDBString":"-1","TargetAuthType":"auth","TargetType":"standalone","TargetTLSEnable":false,"TargetRdbOutput":"local_dump","TargetVersion":"5.0.5","FakeTime":"","Rewrite":true,"FilterDBWhitelist":[],"FilterDBBlacklist":[],"FilterKeyWhitelist":[],"FilterKeyBlacklist":[],"FilterSlot":[],"FilterLua":false,"BigKeyThreshold":524288000,"Psync":false,"Metric":true,"MetricPrintLog":false,"SenderSize":104857600,"SenderCount":4096,"SenderDelayChannelSize":65535,"KeepAlive":0,"PidPath":"","ScanKeyNumber":50,"ScanSpecialCloud":"","ScanKeyFile":"","Qps":200000,"NCpu":0,"HeartbeatUrl":"","HeartbeatInterval":10,"HeartbeatExternal":"","HeartbeatNetworkInterface":"","ReplaceHashTag":false,"ExtraInfo":false,"SockFileName":"","SockFileSize":0,"FilterKey":null,"FilterDB":"","SourceAddressList":["192.168.16.133:6379"],"TargetAddressList":["192.168.16.133:7001"],"SourceVersion":"3.2.4","HeartbeatIp":"127.0.0.1","ShiftTime":0,"TargetReplace":true,"TargetDB":-1,"Version":"bugfix-1.6.23,b40bd294be9892fd9319f88b6b7e7ea978b2cb62,go1.10.1,2019-11-28_20:34:03","Type":"sync"}
2019/12/18 11:16:39 [INFO] routine[0] starts syncing data from 192.168.16.133:6379 to [192.168.16.133:7001] with http[9321]
2019/12/18 11:16:39 [INFO] input password is empty, skip auth address[192.168.16.133:6379] with type[].
2019/12/18 11:16:39 [INFO] dbSyncer[0] rdb file size = 33614
2019/12/18 11:16:39 [INFO] Aux information key:redis-ver value:3.2.4
2019/12/18 11:16:39 [INFO] Aux information key:redis-bits value:64
2019/12/18 11:16:39 [INFO] Aux information key:ctime value:1576638999
2019/12/18 11:16:39 [INFO] Aux information key:used-mem value:6425728
2019/12/18 11:16:39 [INFO] db_size:581 expire_size:2
2019/12/18 11:16:39 [INFO] dbSyncer[0] total = 32.826KB - 32.826KB [100%] entry=581
2019/12/18 11:16:39 [INFO] dbSyncer[0] sync rdb done
2019/12/18 11:16:39 [WARN] dbSyncer[0] GetFakeSlaveOffset not enable when psync == false
2019/12/18 11:16:39 [INFO] dbSyncer[0] FlushEvent:IncrSyncStart Id:redis-shake
2019/12/18 11:16:40 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:41 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:42 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:43 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:44 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:45 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:46 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:47 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:48 [INFO] dbSyncer[0] sync: +forwardCommands=1 +filterCommands=0 +writeBytes=4
2019/12/18 11:16:49 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:50 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:51 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:52 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:53 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:54 [INFO] dbSyncer[0] sync: +forwardCommands=0 +filterCommands=0 +writeBytes=0
2019/12/18 11:16:55 [INFO] dbSyncer[0] sync: +forwardCommands=3 +filterCommands=0 +writeBytes=1022
```

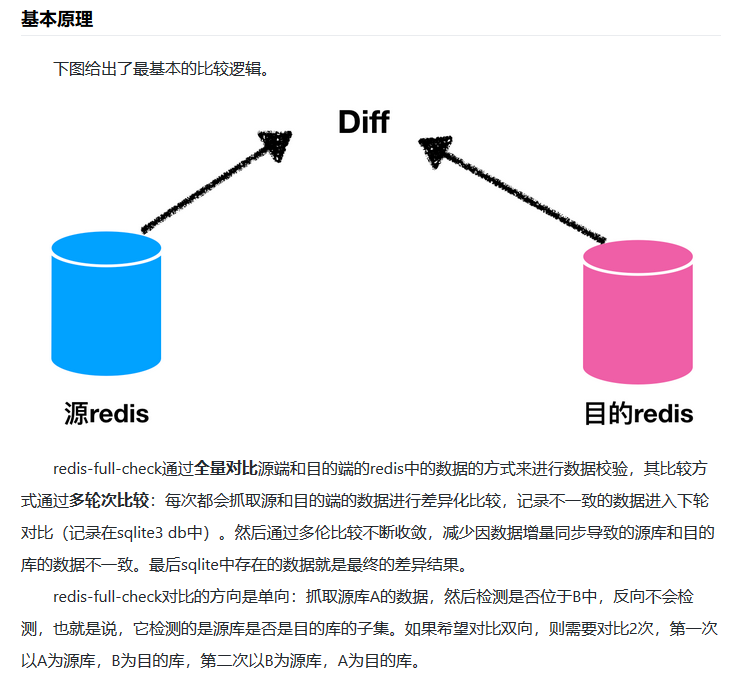
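After "sync rdb done", each `sync:` line in the log excerpt above reports what was forwarded since the previous line (`+forwardCommands`, `+writeBytes`). In that excerpt, 4 commands were forwarded in total: 1 at 11:16:48 and 3 at 11:16:55. The sketch below sums the counter over a captured log, assuming the line format shown above. `forwarded_total` is a hypothetical helper.

```shell
# Sum the "+forwardCommands=N" deltas from redis-shake incremental-sync log lines
# (read from the given files, or from stdin when no file is given).
forwarded_total() {
  sed -n 's/.*+forwardCommands=\([0-9][0-9]*\).*/\1/p' "$@" | awk '{ s += $1 } END { print s + 0 }'
}

# Usage sketch: forwarded_total shake.log
```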

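III. Data verification (using Alibaba Cloud's redis-full-check)

redis-full-check is an open-source tool from Alibaba Cloud's Redis & MongoDB team that checks whether the data in two Redis instances is consistent. It is typically used to verify correctness after migrating data with redis-shake.

Supported: homogeneous or heterogeneous comparison between standalone, master-replica, cluster, and proxy-fronted cloud cluster (Alibaba Cloud) deployments, for Redis versions 2.x to 5.x.

1. Download the latest redis-full-check release and extract it.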

```
[root@bb tmp]# cd redis-full-check-1.4.7
[root@bb redis-full-check-1.4.7]# ls
ChangeLog redis-full-check
```

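2. Compare the data in the source and target Redis instances.

A single run checks the keys of the source against the target, so keys that exist only in the target are not reported. To compare in both directions, run it twice: first with A as the source and B as the target, then with B as the source and A as the target.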
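The two runs shown below can be scripted. This is a minimal sketch, assuming the binary is in the current directory. It uses only the `-s`/`-p`/`-t`/`-a` flags from the tool's `--help`, and it detects success from the final summary line seen in this article's output. `check_both_ways`, `no_conflicts` and `FULL_CHECK` are hypothetical names.

```shell
# Path to the redis-full-check binary; override via the environment if needed.
FULL_CHECK="${FULL_CHECK:-./redis-full-check}"

# Succeed if redis-full-check output on stdin reports zero conflicting keys and fields.
no_conflicts() {
  grep -q 'totally 0 key(s) and 0 field(s) conflict'
}

# Compare A against B, then B against A.
# Args: addrA passA addrB passB (pass "" when an instance has no password).
check_both_ways() {
  a="$1"; pa="$2"; b="$3"; pb="$4"
  "$FULL_CHECK" -s "$a" ${pa:+-p "$pa"} -t "$b" ${pb:+-a "$pb"} | no_conflicts || return 1
  "$FULL_CHECK" -s "$b" ${pb:+-p "$pb"} -t "$a" ${pa:+-a "$pa"} | no_conflicts || return 1
}

# Usage sketch:
# check_both_ways 192.168.16.133:6379 "" 192.168.16.133:7001 123456 && echo "consistent both ways"
```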

```
[root@bb redis-full-check-1.4.7]# ./redis-full-check --help
Usage:
  redis-full-check [OPTIONS]

Application Options:
  -s, --source=SOURCE                 Set host:port of source redis. If db type is cluster, split by semicolon(;'), e.g., 10.1.1.1:1000;10.2.2.2:2000;10.3.3.3:3000.
                                      We also support auto-detection, so "master@10.1.1.1:1000" or "slave@10.1.1.1:1000" means choose master or slave. Only need to
                                      give a role in the master or slave.
  -p, --sourcepassword=Password       Set source redis password
      --sourceauthtype=AUTH-TYPE      useless for opensource redis, valid value:auth/adminauth (default: auth)
      --sourcedbtype=                 0: db, 1: cluster 2: aliyun proxy, 3: tencent proxy (default: 0)
      --sourcedbfilterlist=           db white list that need to be compared, -1 means fetch all, "0;5;15" means fetch db 0, 5, and 15 (default: -1)
  -t, --target=TARGET                 Set host:port of target redis. If db type is cluster, split by semicolon(;'), e.g., 10.1.1.1:1000;10.2.2.2:2000;10.3.3.3:3000.
                                      We also support auto-detection, so "master@10.1.1.1:1000" or "slave@10.1.1.1:1000" means choose master or slave. Only need to
                                      give a role in the master or slave.
  -a, --targetpassword=Password       Set target redis password
      --targetauthtype=AUTH-TYPE      useless for opensource redis, valid value:auth/adminauth (default: auth)
      --targetdbtype=                 0: db, 1: cluster 2: aliyun proxy 3: tencent proxy (default: 0)
      --targetdbfilterlist=           db white list that need to be compared, -1 means fetch all, "0;5;15" means fetch db 0, 5, and 15 (default: -1)
  -d, --db=Sqlite3-DB-FILE            sqlite3 db file for store result. If exist, it will be removed and a new file is created. (default: result.db)
      --result=FILE                   store all diff result into the file, format is 'db diff-type key field'
      --comparetimes=COUNT            Total compare count, at least 1. In the first round, all keys will be compared. The subsequent rounds of the comparison will be
                                      done on the previous results. (default: 3)
  -m, --comparemode=                  compare mode, 1: compare full value, 2: only compare value length, 3: only compare keys outline, 4: compare full value, but
                                      only compare value length when meets big key (default: 2)
      --id=                           used in metric, run id, useless for open source (default: unknown)
      --jobid=                        used in metric, job id, useless for open source (default: unknown)
      --taskid=                       used in metric, task id, useless for open source (default: unknown)
  -q, --qps=                          max batch qps limit: e.g., if qps is 10, full-check fetches 10 * $batch keys every second (default: 15000)
      --interval=Second               The time interval for each round of comparison(Second) (default: 5)
      --batchcount=COUNT              the count of key/field per batch compare, valid value [1, 10000] (default: 256)
      --parallel=COUNT                concurrent goroutine number for comparison, valid value [1, 100] (default: 5)
      --log=FILE                      log file, if not specified, log is put to console
      --loglevel=LEVEL                log level: 'debug', 'info', 'warn', 'error', default is 'info'
      --metric                        print metric in log
      --bigkeythreshold=COUNT
  -f, --filterlist=FILTER             if the filter list isn't empty, all elements in list will be synced. The input should be split by '|'. The end of the string is
                                      followed by a * to indicate a prefix match, otherwise it is a full match. e.g.: 'abc*|efg|m*' matches 'abc', 'abc1', 'efg',
                                      'm', 'mxyz', but 'efgh', 'p' aren't'
      --systemprofile=SYSTEM-PROFILE  port that used to print golang inner head and stack message (default: 20445)
  -v, --version

Help Options:
  -h, --help                          Show this help message

# Compare the two instances; "all finish successfully" in the output means the check passed
[root@bb redis-full-check-1.4.7]# ./redis-full-check -s 192.168.16.133:6379 -t 192.168.16.133:7001 -a 123456
[INFO 2019-12-18-11:18:52 main.go:65]: init log success
[INFO 2019-12-18-11:18:52 main.go:164]: configuration: {192.168.16.133:6379 auth 0 -1 192.168.16.133:7001 bg8TjsqfaNIAxTjStlx7glEbLGxumy auth 0 -1 result.db 3 2 unknown unknown unknown 15000 5 256 5 false 16384 20445 false}
[INFO 2019-12-18-11:18:52 main.go:166]: ---------
[INFO 2019-12-18-11:18:52 full_check.go:238]: sourceDbType=0, p.sourcePhysicalDBList=[meaningless]
[INFO 2019-12-18-11:18:52 full_check.go:243]: db=0:keys=581
[INFO 2019-12-18-11:18:52 full_check.go:253]: ---------------- start 1th time compare
[INFO 2019-12-18-11:18:52 full_check.go:278]: start compare db 0
[INFO 2019-12-18-11:18:52 scan.go:20]: build connection[source redis addr: [192.168.16.133:6379]]
[INFO 2019-12-18-11:18:53 full_check.go:203]: stat:
times:1, db:0, dbkeys:581, finish:33%, finished:true
KeyScan:{581 581 0}

[INFO 2019-12-18-11:18:53 full_check.go:250]: wait 5 seconds before start
[INFO 2019-12-18-11:18:58 full_check.go:253]: ---------------- start 2th time compare
[INFO 2019-12-18-11:18:58 full_check.go:278]: start compare db 0
[INFO 2019-12-18-11:18:58 full_check.go:203]: stat:
times:2, db:0, finished:true
KeyScan:{0 0 0}

[INFO 2019-12-18-11:18:58 full_check.go:250]: wait 5 seconds before start
[INFO 2019-12-18-11:19:03 full_check.go:253]: ---------------- start 3th time compare
[INFO 2019-12-18-11:19:03 full_check.go:278]: start compare db 0
[INFO 2019-12-18-11:19:03 full_check.go:203]: stat:
times:3, db:0, finished:true
KeyScan:{0 0 0}

[INFO 2019-12-18-11:19:03 full_check.go:328]: --------------- finished! ----------------
all finish successfully, totally 0 key(s) and 0 field(s) conflict

# Second comparison, in the reverse direction
[root@bb0 redis-full-check-1.4.7]# ./redis-full-check -s 192.168.16.133:7001 -p 123456 -t 192.168.16.133:6379
[INFO 2019-12-18-14:30:49 main.go:65]: init log success
[INFO 2019-12-18-14:30:49 main.go:164]: configuration: {192.168.16.133:7001 bg8TjsqfaNIAxTjStlx7glEbLGxumy auth 0 -1 192.168.16.133:6379 auth 0 -1 result.db 3 2 unknown unknown unknown 15000 5 256 5 false 16384 20445 false}
[INFO 2019-12-18-14:30:49 main.go:166]: ---------
[INFO 2019-12-18-14:30:49 full_check.go:238]: sourceDbType=0, p.sourcePhysicalDBList=[meaningless]
[INFO 2019-12-18-14:30:49 full_check.go:243]: db=0:keys=581
[INFO 2019-12-18-14:30:49 full_check.go:253]: ---------------- start 1th time compare
[INFO 2019-12-18-14:30:49 full_check.go:278]: start compare db 0
[INFO 2019-12-18-14:30:49 scan.go:20]: build connection[source redis addr: [192.168.16.133:7001]]
[INFO 2019-12-18-14:30:50 full_check.go:203]: stat:
times:1, db:0, dbkeys:581, finish:33%, finished:true
KeyScan:{581 581 0}

[INFO 2019-12-18-14:30:50 full_check.go:250]: wait 5 seconds before start
[INFO 2019-12-18-14:30:55 full_check.go:253]: ---------------- start 2th time compare
[INFO 2019-12-18-14:30:55 full_check.go:278]: start compare db 0
[INFO 2019-12-18-14:30:55 full_check.go:203]: stat:
times:2, db:0, finished:true
KeyScan:{0 0 0}

[INFO 2019-12-18-14:30:55 full_check.go:250]: wait 5 seconds before start
[INFO 2019-12-18-14:31:00 full_check.go:253]: ---------------- start 3th time compare
[INFO 2019-12-18-14:31:00 full_check.go:278]: start compare db 0
[INFO 2019-12-18-14:31:00 full_check.go:203]: stat:
times:3, db:0, finished:true
KeyScan:{0 0 0}

[INFO 2019-12-18-14:31:00 full_check.go:328]: --------------- finished! ----------------
all finish successfully, totally 0 key(s) and 0 field(s) conflict
```

This completes the data migration from Redis 3.2.4 to Redis 5.0.5.

Tool references:

https://yq.aliyun.com/articles/691794

https://yq.aliyun.com/articles/690463?spm=a2c4e.11153940.0.0.50c53f76HMcVeh